Crawler

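The crawler is designed to improve the full page cache (FPC) hit rate. It is also known as a cache warmer. Because it requests pages in advance so they are already cached, the crawler gives each visitor fast page loads and a smoother shopping experience.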


Features

- Ability to create an unlimited number of crawlers, each with its own settings

- The crawler sends requests concurrently, which makes it very fast

- Site performance protection:

  - The crawler pauses automatically while the site's average load time is higher than the maximum allowed average load time.

  - Cron runs up to 2 crawlers at the same time

- Reports about slow-loading pages and error response codes are stored for the site administrator

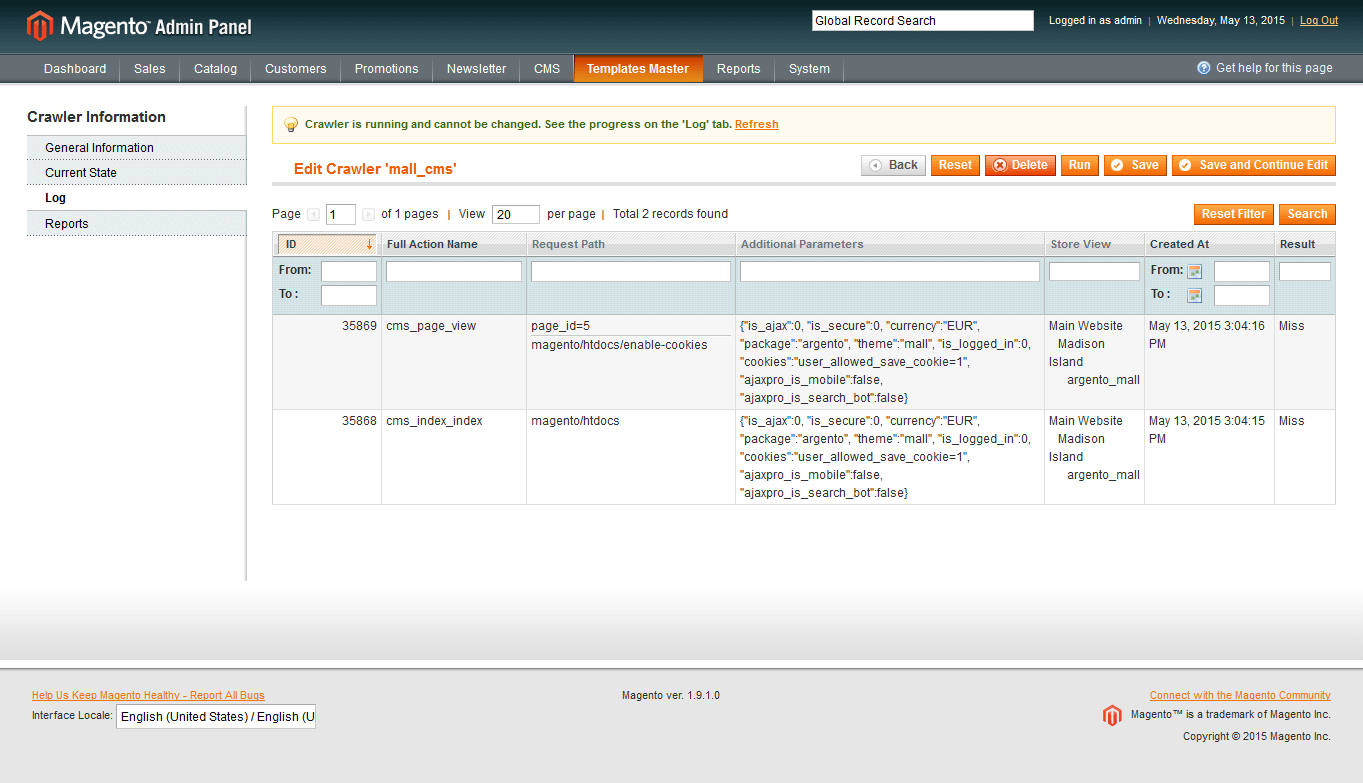

- Ability to run a crawler manually from the backend interface

- Real-time crawler log
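The feature list says cron runs at most 2 crawlers at the same time. A minimal sketch of how a cron job could respect that limit is shown here; `CrawlerConfig`, its fields, and `pick_crawlers_for_cron` are hypothetical names for illustration, not the extension's actual code.

```python
from dataclasses import dataclass


# Hypothetical model of a configured crawler; field names are illustrative only.
@dataclass
class CrawlerConfig:
    name: str
    enabled: bool = True
    running: bool = False


MAX_PARALLEL_CRAWLERS = 2  # the "up to 2 crawlers at the same time" limit


def pick_crawlers_for_cron(crawlers: list[CrawlerConfig]) -> list[CrawlerConfig]:
    """Return the crawlers a cron run may start without exceeding the limit."""
    already_running = sum(1 for c in crawlers if c.running)
    free_slots = max(0, MAX_PARALLEL_CRAWLERS - already_running)
    # Only enabled crawlers that are not already running are candidates.
    candidates = [c for c in crawlers if c.enabled and not c.running]
    return candidates[:free_slots]


crawlers = [
    CrawlerConfig("products", running=True),
    CrawlerConfig("categories"),
    CrawlerConfig("cms"),
]
print([c.name for c in pick_crawlers_for_cron(crawlers)])  # ['categories']
```

With one crawler already running, only one slot is free, so the cron run starts a single additional crawler and leaves the rest for later runs.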

Settings

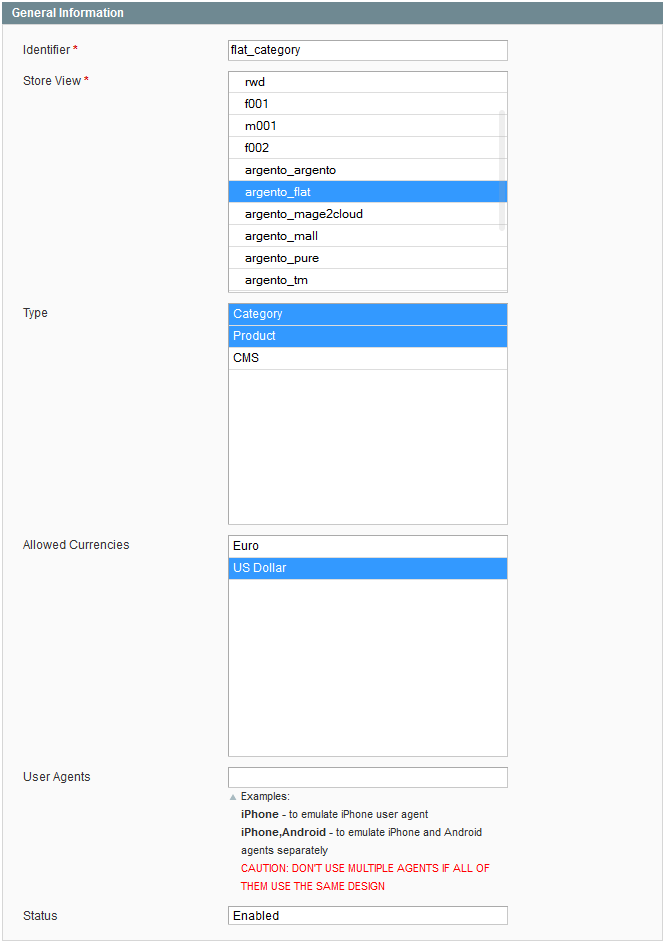

General section

Store View

Tells the crawler which store views to process.

Crawler Type

Selects the set of URLs to crawl. You can select more than one set. The following crawler types are available:

- Product

- Category

- Cms

Currencies

Makes the crawler process the selected currencies. If the available currencies differ between store views, the crawler handles this automatically and processes only the currencies available in each store view.
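Together, the Store View, Crawler Type, and Currencies settings determine which pages are requested. The sketch here assumes the crawl covers every selected store view, every selected crawler type, and every selected currency that the store view actually offers. The function name and the dictionary shapes are hypothetical.

```python
from itertools import product


def build_crawl_jobs(
    store_views: list[str],
    selected_types: list[str],
    selected_currencies: list[str],
    available_currencies: dict[str, list[str]],
    urls: dict[str, dict[str, list[str]]],
) -> list[tuple[str, str, str]]:
    """Return (store_view, currency, url) jobs to crawl.

    urls maps store view -> crawler type ("product", "category", "cms") -> URLs.
    available_currencies maps store view -> currencies enabled in that view.
    """
    jobs = []
    for store in store_views:
        # Keep only selected currencies that this store view actually offers.
        currencies = [c for c in selected_currencies if c in available_currencies.get(store, [])]
        # Merge the URL sets of every selected crawler type for this store view.
        store_urls = [u for t in selected_types for u in urls.get(store, {}).get(t, [])]
        jobs.extend((store, currency, url) for currency, url in product(currencies, store_urls))
    return jobs


jobs = build_crawl_jobs(
    store_views=["default", "fr"],
    selected_types=["product", "cms"],
    selected_currencies=["USD", "EUR"],
    available_currencies={"default": ["USD", "EUR"], "fr": ["EUR"]},
    urls={"default": {"product": ["/p1"], "cms": ["/about"]}, "fr": {"product": ["/fr/p1"]}},
)
print(len(jobs))  # 5: four for "default" (2 currencies x 2 URLs), one for "fr" (EUR only)
```

USD is never requested for the "fr" store view because that view does not offer it, which mirrors the per-store-view filtering described above.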

User Agents

Useful if you use theme exceptions for some devices and want the pages for those devices crawled too.

Example 1:

You are using the ArgentoFlat theme as the main theme and MobileStar for iPhone and Android. In this case, set the value of the User Agents field to iPhone. You should not add Android, because Android uses the same design as iPhone.

Example 2:

You are using the ArgentoFlat theme as the main theme, ArgentoArgento for the Chrome browser, and MobileStar for iPhone and Android. In this case, set the value of the User Agents field to Chrome,iPhone. You should not add Android, because Android uses the same design as iPhone.
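The User Agents value is a comma-separated list such as Chrome,iPhone. The sketch here shows one way such a value could be turned into crawl requests. It assumes the crawler always makes one pass with a default agent for the main theme and one extra pass per listed agent, sending the listed value as the User-Agent header. `DEFAULT_USER_AGENT`, `user_agents_from_setting`, and `requests_for_url` are hypothetical.

```python
# Hypothetical default agent used for the main theme; not taken from the extension.
DEFAULT_USER_AGENT = "Mozilla/5.0 (compatible; CacheWarmer)"


def user_agents_from_setting(value: str) -> list[str]:
    """Turn a User Agents value such as "Chrome,iPhone" into the agents to crawl with.

    Assumption: the default agent is always crawled first so the main theme is warmed,
    and each listed agent adds one more pass over the same URLs.
    """
    agents = [DEFAULT_USER_AGENT]
    for part in value.split(","):
        agent = part.strip()
        if agent and agent not in agents:  # skip blanks and duplicates
            agents.append(agent)
    return agents


def requests_for_url(url: str, setting: str) -> list[dict]:
    # One request per user agent; the User-Agent header selects the theme exception.
    return [{"url": url, "headers": {"User-Agent": ua}} for ua in user_agents_from_setting(setting)]


print(user_agents_from_setting("Chrome,iPhone"))
print(len(requests_for_url("/women.html", "Chrome,iPhone")))  # 3
```

Under this model, every listed agent multiplies the number of requests. This is presumably why the examples leave Android out: its pages share the iPhone design, so an Android pass would add load without warming anything new.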

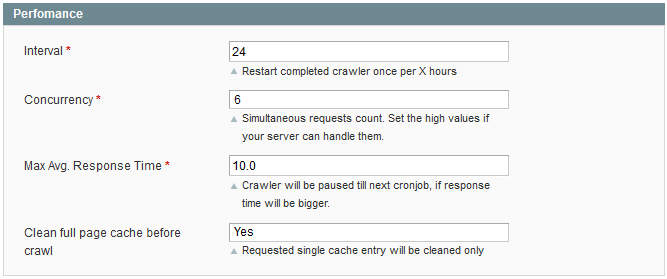

Performance section

This section allows you to tune the crawler’s performance.

Interval

After the crawler completes its job, it waits for the specified number of hours before it begins working again.
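Read literally, Interval is a cool-down measured from the moment a pass finishes. The sketch here expresses that as date arithmetic. `next_run_at` and `is_due` are hypothetical helpers. Because crawlers are launched by cron, the actual start would be the first cron run at or after the computed time.

```python
from datetime import datetime, timedelta
from typing import Optional


def next_run_at(completed_at: datetime, interval_hours: float) -> datetime:
    """Earliest time a crawler may start again after finishing a pass."""
    return completed_at + timedelta(hours=interval_hours)


def is_due(now: datetime, completed_at: Optional[datetime], interval_hours: float) -> bool:
    # A crawler that has never finished a pass may start right away.
    if completed_at is None:
        return True
    return now >= next_run_at(completed_at, interval_hours)


finished = datetime(2024, 1, 1, 10, 0)
print(next_run_at(finished, 6))  # 2024-01-01 16:00:00
```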

Concurrency

Number of concurrent requests the crawler sends. You can choose from 2 to 20 threads.
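The Concurrency setting limits how many requests are in flight at once. A worker pool is a common way to express that. The sketch here uses Python's `ThreadPoolExecutor` and clamps the value to the documented 2 to 20 range. The function names and the injected `fetch` callable are hypothetical, and the extension itself may issue concurrent requests differently.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable

MIN_CONCURRENCY, MAX_CONCURRENCY = 2, 20  # range offered by the Concurrency setting


def clamp_concurrency(value: int) -> int:
    """Keep the requested thread count inside the allowed 2..20 range."""
    return min(max(value, MIN_CONCURRENCY), MAX_CONCURRENCY)


def crawl_concurrently(urls: Iterable[str], fetch: Callable[[str], int], concurrency: int) -> dict[str, int]:
    """Request every URL with at most `concurrency` requests in flight; return URL -> status.

    `fetch` is injected (URL in, HTTP status out) so the sketch does not depend on
    a particular HTTP client.
    """
    url_list = list(urls)
    with ThreadPoolExecutor(max_workers=clamp_concurrency(concurrency)) as pool:
        statuses = list(pool.map(fetch, url_list))  # map preserves input order
    return dict(zip(url_list, statuses))


def fake_fetch(url: str) -> int:
    # Stand-in for a real HTTP request, so the example runs offline.
    return 404 if url.endswith("missing") else 200


print(crawl_concurrently(["/a", "/b", "/missing"], fake_fetch, concurrency=50))
# {'/a': 200, '/b': 200, '/missing': 404}
```

A request for 50 threads is clamped to 20. A higher value finishes a pass sooner but puts more simultaneous load on the server.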

Max. Average Response Time

The crawler pauses until the next cron job run if the average load time exceeds this limit.
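Max. Average Response Time works like a circuit breaker. The sketch here keeps a plain arithmetic mean of response times for the current pass and stops the pass once that mean exceeds the limit. `ResponseTimeGuard` is a hypothetical helper, and this page does not document the averaging window the extension actually uses.

```python
class ResponseTimeGuard:
    """Track the mean response time of a crawl pass and decide when to pause.

    Hypothetical helper: averages over every request in the current pass.
    """

    def __init__(self, max_average_seconds: float) -> None:
        self.max_average_seconds = max_average_seconds
        self.total_seconds = 0.0
        self.count = 0

    def record(self, seconds: float) -> None:
        self.total_seconds += seconds
        self.count += 1

    @property
    def average(self) -> float:
        return self.total_seconds / self.count if self.count else 0.0

    def should_pause(self) -> bool:
        # Pause (until the next cron run) once the average exceeds the limit.
        return self.average > self.max_average_seconds


guard = ResponseTimeGuard(max_average_seconds=2.0)
for elapsed in [1.0, 1.5, 4.0]:
    guard.record(elapsed)
    if guard.should_pause():
        break  # stop this pass; the next cron run resumes the crawl
print(round(guard.average, 2))  # 2.17
```

After the first two requests the mean is 1.25 s. The slow third request lifts it to about 2.17 s, above the 2 s limit, so the pass stops.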

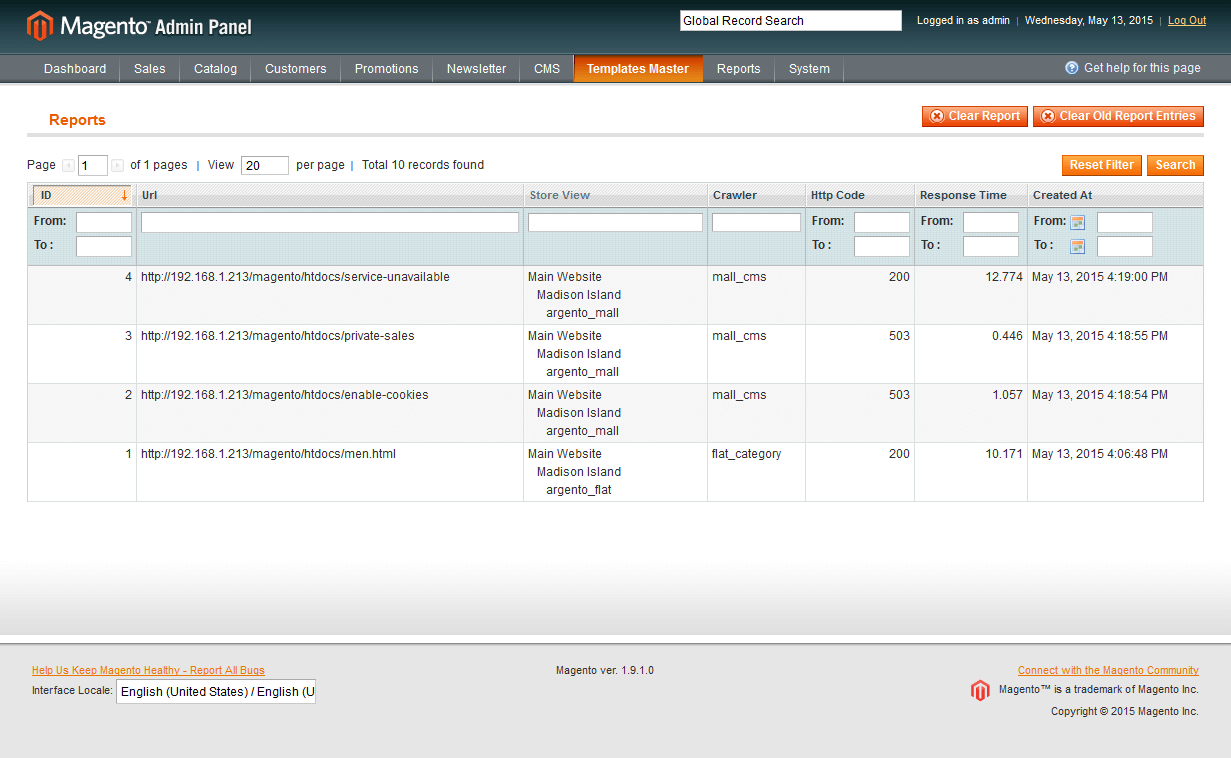

Reports

Reports store all slow and unsuccessful requests. If they show many entries, consider tuning the crawler settings to reduce the server load.
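A report can be thought of as two filters over the crawl results. The sketch here assumes that a request is unsuccessful when its status code is 400 or higher and slow when it takes longer than a threshold. Both rules, the `CrawlResult` type, and the `slow_threshold_seconds` parameter are assumptions for illustration, because this page does not define them.

```python
from dataclasses import dataclass


@dataclass
class CrawlResult:
    url: str
    status: int      # HTTP status code returned for the page
    seconds: float   # time taken to load the page


def build_report(results: list[CrawlResult], slow_threshold_seconds: float) -> dict[str, list[str]]:
    """Collect URLs of slow requests and of unsuccessful (error) requests.

    A single result can appear in both lists, e.g. a 500 that also took long.
    """
    slow = [r.url for r in results if r.seconds > slow_threshold_seconds]
    errors = [r.url for r in results if r.status >= 400]
    return {"slow": slow, "errors": errors}


results = [
    CrawlResult("/a", 200, 0.4),
    CrawlResult("/b", 200, 3.2),
    CrawlResult("/c", 404, 0.1),
    CrawlResult("/d", 500, 5.0),
]
print(build_report(results, slow_threshold_seconds=2.0))
# {'slow': ['/b', '/d'], 'errors': ['/c', '/d']}
```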

Log

You can view the crawler's real-time log on the corresponding tab.